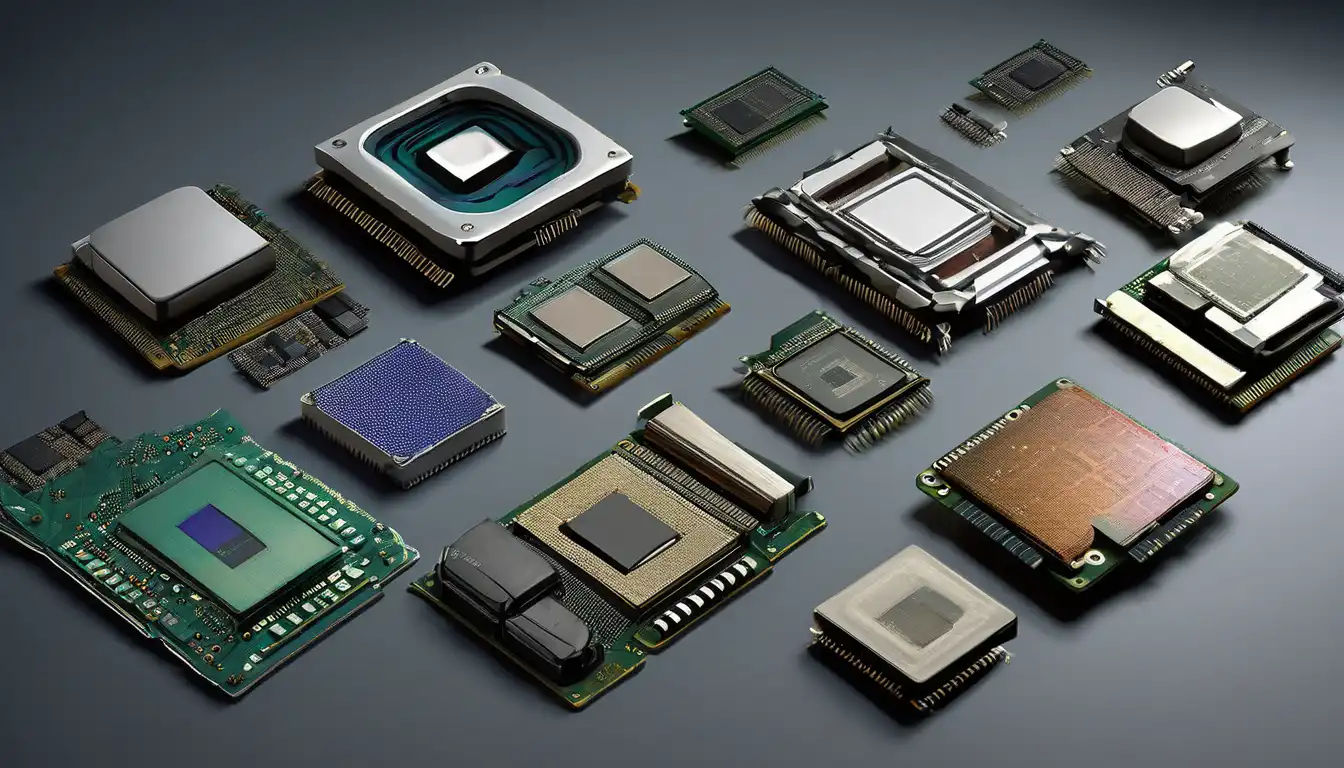

The Dawn of Computing: Early Processor Beginnings

The evolution of computer processors represents one of the most remarkable technological journeys in human history. Beginning with vacuum tube systems in the 1940s, processors have grown exponentially in both capability and complexity. The first electronic computers, such as ENIAC, used roughly 18,000 vacuum tubes to perform calculations that a modern processor completes in nanoseconds. These early machines filled entire rooms while delivering a tiny fraction of the processing power of today's smartphones.

The transistor, invented at Bell Labs in 1947, revolutionized processor design once it reached commercial computers in the 1950s. Transistors replaced bulky vacuum tubes, making computers smaller, more reliable, and more energy-efficient. This transition set the stage for the steady growth in transistor counts later described by Moore's Law, which Gordon Moore first articulated in 1965. The early IBM 700 series mainframes still relied on vacuum tubes, but the transistorized IBM 7000 series that followed late in the decade demonstrated the potential of transistor-based processing, paving the way for the integrated circuit revolution that would follow.

The Integrated Circuit Revolution

The late 1950s witnessed the birth of the integrated circuit (IC), which allowed multiple transistors to be fabricated on a single chip, and the 1960s saw the technology mature. Jack Kilby at Texas Instruments (1958) and Robert Noyce at Fairchild Semiconductor (1959) independently developed the first working integrated circuits, fundamentally changing processor manufacturing. This innovation led to the creation of the first microprocessors in the early 1970s, with Intel's 4004 processor representing a watershed moment in computing history.

The 4004, released in 1971, contained 2,300 transistors and operated at 740 kHz – specifications that seem almost prehistoric by today's standards. However, this 4-bit processor demonstrated that complex computational tasks could be performed by a single chip. The success of the 4004 prompted rapid development, leading to the 8-bit Intel 8008 and eventually the legendary 8080 processor, which became the foundation for many early personal computers.
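To put the 4004's 2,300 transistors in perspective, the short sketch below estimates how many doublings separate it from a much later chip, and the doubling period this implies. The function name and the comparison figure (16 billion transistors for a 2020-era chip) are illustrative assumptions, not figures from this article.

```python
import math


def doubling_period(count_start, year_start, count_end, year_end):
    """Return (doublings, years_per_doubling) between two transistor counts."""
    doublings = math.log2(count_end / count_start)
    return doublings, (year_end - year_start) / doublings


# The Intel 4004 figures come from the text; the 2020 figure is an assumed,
# illustrative value for a modern chip.
doublings, period = doubling_period(2_300, 1971, 16_000_000_000, 2020)
print(f"{doublings:.1f} doublings, one every {period:.1f} years")
```

Under these assumptions the result is roughly one doubling every two years, which is in line with the usual statement of Moore's Law.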

Key Milestones in Early Processor Development

- 1947: Invention of the transistor at Bell Labs

- 1958: First integrated circuit developed

- 1971: Intel 4004 – the first commercial microprocessor

- 1974: Intel 8080 sets new standards for performance

- 1978: Introduction of 16-bit processing with Intel 8086

The Personal Computer Era and x86 Dominance

The 1980s marked the beginning of the personal computer revolution, driven largely by Intel's x86 architecture. The Intel 8088 processor, chosen by IBM for their first PC, established a standard that would dominate personal computing for decades. This era saw fierce competition between Intel, AMD, and other manufacturers, each pushing the boundaries of processing power while maintaining compatibility with the growing software ecosystem.

As personal computers became more prevalent, processor manufacturers focused on increasing clock speeds and improving architecture. The transition from 16-bit to 32-bit processing in the mid-1980s with processors like the Intel 80386 enabled more sophisticated operating systems and applications. This period also saw the rise of reduced instruction set computing (RISC) architectures, which offered alternative approaches to processor design that would influence future developments.

The 1990s brought unprecedented growth in processor performance, with Intel's Pentium series becoming a household name. The "megahertz wars" between Intel and AMD pushed clock speeds from tens of megahertz at the start of the decade to the 1 GHz mark, first reached in early 2000. Meanwhile, pipelining and superscalar architectures allowed processors to execute multiple instructions per clock cycle, dramatically improving performance without relying solely on clock speed increases.

The Multi-Core Revolution and Modern Architectures

The mid-2000s marked a fundamental shift in processor design philosophy. As rising power consumption and heat generation made further clock speed increases impractical, manufacturers turned to multi-core designs. Instead of making a single core faster, they placed multiple processing cores on a single chip. This parallel approach allowed performance to keep improving while keeping power consumption in check.
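The trade-off described above can be sketched with the standard approximation for dynamic switching power, P ≈ activity × C × V² × f. The values below are normalized, hypothetical numbers chosen only for illustration. In particular, the assumption that half the clock speed permits 80% of the original voltage is not a measurement, and the comparison assumes the workload splits perfectly across two cores.

```python
def dynamic_power(capacitance, voltage, frequency, activity=1.0):
    """Approximate dynamic switching power: P = activity * C * V^2 * f."""
    return activity * capacitance * voltage ** 2 * frequency


# Normalized, hypothetical operating points (not measurements).
single_core = dynamic_power(capacitance=1.0, voltage=1.0, frequency=1.0)

# Two cores at half the clock; the lower frequency is assumed to permit
# running at 80% of the original voltage.
dual_core = 2 * dynamic_power(capacitance=1.0, voltage=0.8, frequency=0.5)

print(f"single core: {single_core:.2f}, dual core: {dual_core:.2f}")
```

Because power scales with the square of voltage, the two slower cores in this sketch deliver the same total number of clock cycles for noticeably less power, which is the basic argument for going multi-core.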

Intel's Core 2 Duo and AMD's Athlon 64 X2 processors demonstrated the viability of multi-core computing for mainstream users. Software developers gradually adapted to this new paradigm, optimizing applications to take advantage of parallel processing capabilities. The multi-core revolution continues today, with high-end desktop and workstation processors now offering 64 cores or more.
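How much an application gains from extra cores depends on how much of its work can actually run in parallel. A common first-order model is Amdahl's law, which this article does not name but which captures the idea. The sketch below is a minimal illustration; the function name and the 90% parallel fraction are assumptions made for the example.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Upper bound on speedup when only part of a program runs in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Assumed workload: 90% of the run time can be parallelized.
for cores in (2, 4, 16, 64):
    print(cores, round(amdahl_speedup(0.9, cores), 2))
```

Under this assumption, even 64 cores yield a speedup of less than 9x, because the serial 10% comes to dominate the run time. This is why adapting software to multi-core hardware involves restructuring the serial portions, not just adding threads.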

Modern Processor Innovations

- Heterogeneous Computing: Combining different types of cores for optimal performance and efficiency

- AI Acceleration: Dedicated hardware for machine learning tasks

- 3D Stacking: Vertical integration of components for improved density

- Advanced Node Processes: Shrinking transistor features to just a few nanometers

Specialized Processors and the Future of Computing

Today's processor landscape is characterized by specialization and diversification. While general-purpose CPUs continue to evolve, we're seeing increased adoption of specialized processors designed for specific workloads. Graphics processing units (GPUs) have evolved from simple display controllers to powerful parallel processors capable of handling complex computational tasks. Field-programmable gate arrays (FPGAs) and application-specific integrated circuits (ASICs) provide customized processing solutions for specialized applications.

The rise of artificial intelligence and machine learning has driven development of neural processing units (NPUs) and tensor processing units (TPUs), designed specifically for AI workloads. Meanwhile, quantum computing represents a possible next frontier in processor evolution, promising to solve certain classes of problems that are intractable for classical computers. While still in their early stages, quantum processors demonstrate the continuing innovation in computational technology.

Looking ahead, processor evolution will likely focus on several key areas. Neuromorphic computing, which mimics the structure and function of biological brains, offers potential breakthroughs in energy efficiency and cognitive computing. Photonic processors, using light instead of electricity, could revolutionize data transfer speeds and power consumption. As we approach physical limits of silicon-based computing, new materials like graphene and carbon nanotubes may enable next-generation processors.

Conclusion: The Unstoppable March of Progress

The evolution of computer processors represents one of technology's greatest success stories. From room-sized vacuum tube systems to nanometer-scale multi-core chips, processor development has followed an exponential growth curve that has transformed society. Each generation has built upon the innovations of the previous, driving improvements in performance, efficiency, and capability that have enabled the digital revolution.

As we look to the future, the lessons from processor evolution remain relevant: innovation often comes from rethinking fundamental assumptions, collaboration drives progress, and technological limitations frequently inspire creative solutions. The journey from simple calculating machines to systems capable of running artificial intelligence workloads demonstrates humanity's remarkable capacity for technological advancement. The next chapters in processor evolution promise to be just as transformative as those that have come before.